If there is one takeaway from the workforce transformation over the past several years, it is that employees put a high value on choice: how, when, and where they work. Flexibility is no longer a luxury but a foundational need in the evolving workplace.

The desire for choice extends to how we collaborate remotely versus in the room. Recently, AI-driven intelligence and room automation have come a long way in providing better visibility and participation for far-end participants. Q-SYS VisionSuite AI-based presenter tracking and camera switching stands as a shining example of leveraging technology to curate the visuals between in-room presenters and participants.

One of the benefits of being in the room is having the choice to watch what interests you most. Often, when you are in the room, a passing glance at unspoken audience reactions provides powerful context and ultimately keeps you more engaged. But far-end participants are still locked into whichever view the near-end technology chooses to send and are thus deprived of the ability to seek additional context. Wouldn’t it be great to see more within the room and choose what to focus on?

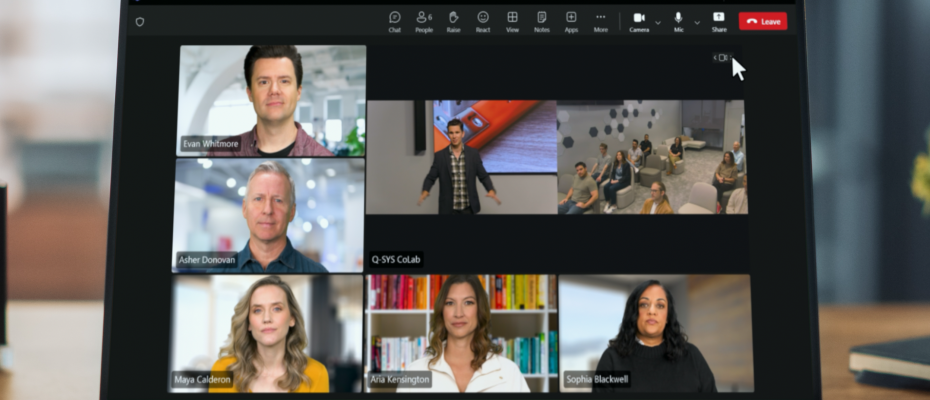

Microsoft’s multiple camera view capability within Microsoft Teams Rooms helps to solve this problem by offering up to four simultaneous camera streams from a single room in the gallery view. A far-end participant also has the choice to view all of the feeds at once or to focus on just one of them.

By pairing Q-SYS VisionSuite with Microsoft Teams’ multiple camera view, you can further enhance the far-end participant experience. Most camera streams provide static shots, but with Q-SYS VisionSuite you can provide dynamic shots of what is happening in the room.

For example, in an all-hands meeting or learning space, you may have Microsoft’s multiple camera view capability configured with a presenter stream and an audience stream. Q-SYS VisionSuite adds AI-based tracking on the presenter stream so the presenter can move freely around the stage and engage with the audience, all while being framed appropriately for the far-end viewer. VisionSuite can also automatically switch to close-up views of audience members when they ask a question, so far-end viewers see who is asking the question instead of a wide shot.

Best of all, with Q-SYS VisionSuite, far-end participants can see both audience and presenter reactions at the same time. That experience is enhanced on demand by the viewer’s ability to choose what to focus on at any given moment, resulting in greater engagement and elevating hybrid experiences.

Ready to learn more? Check out our eBook on offering deeper collaboration in a variety of high-impact spaces with advanced features from Q-SYS and Microsoft Teams.

Vic Bhagat

Latest articles by Vic Bhagat

- Q-SYS Reflect Trial Now Available on the Microsoft Marketplace - November 3, 2025

- Q-SYS and Logitech Deliver Google Meet Room Solutions for High-Impact Spaces - April 7, 2025

- Make Your Choice: Q-SYS VisionSuite with Microsoft Teams Multiple Camera View - January 31, 2025